How I Built a Developer Digital Twin with Agentic AI (And What It Got Right & Wrong)

July 15, 2025

AI tools have already changed how we write software—autocomplete, code suggestions, and natural language prompts are now part of the daily workflow.

The use of AI to streamline software development keeps accelerating. What started as basic, non-AI IntelliSense and autocomplete quickly evolved into browser-based AI prompts and then into AI built directly into the IDE. It’s been an astounding shift, from passive helpers to intelligent software copilots, and developers using these tools are seeing serious productivity gains.

So what’s next?

What if your tools evolved from copilots into something even more capable: an autonomous partner?

What if you had a digital version of yourself… your own digital twin who mirrors how you develop software?

In this post, I’ll walk you through what I believe is the next leap in software developer productivity: building a digital developer twin—an intelligent agent embedded in your IDE that mimics your development workflow. I’ll show you how I tested this concept using Windsurf and a user story from a real project.

If you’ve ever dreamed of focusing on the hard problems while an AI assistant handled the boilerplate code, this one’s for you.

What Is a Developer’s Digital Twin?

Unlike traditional AI suggestions that require constant human prompting, a digital twin operates with more independence, enabling semi-automated software development.

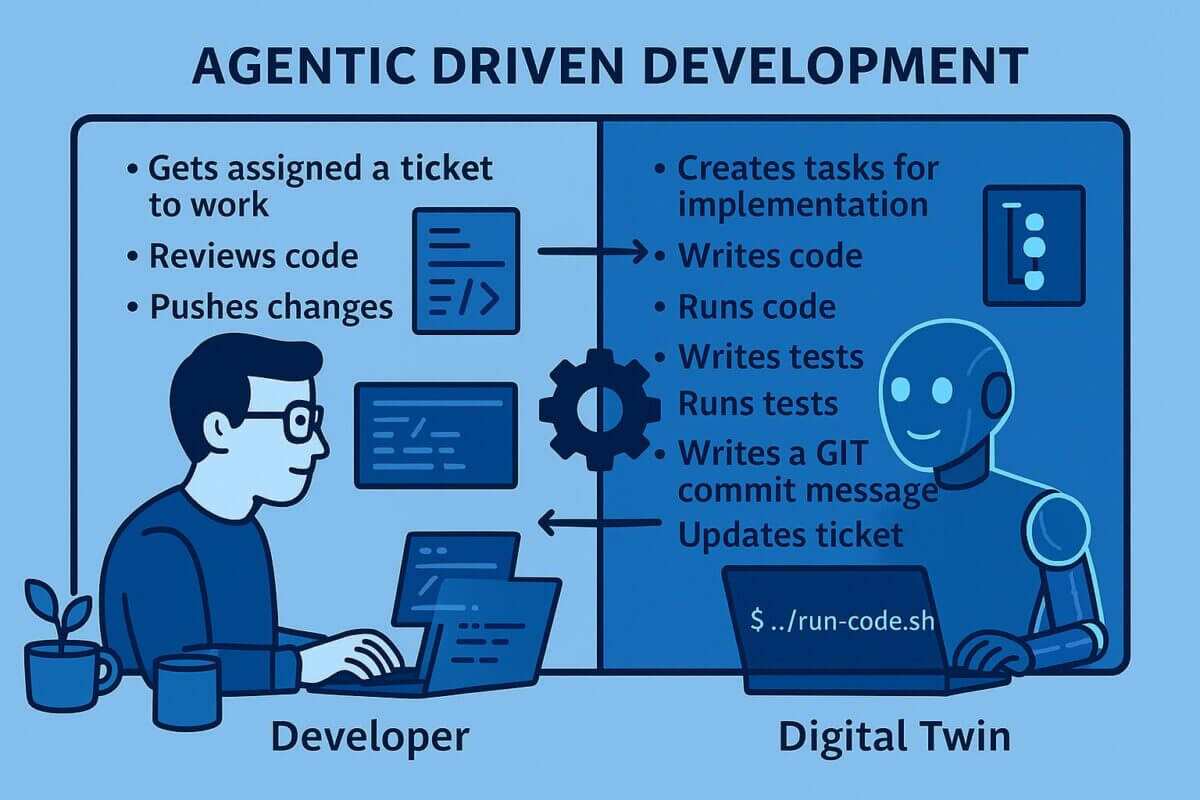

How Developers Work Today (And Where Agentic AI Fits In)

First, let’s review the basic process a developer goes through, which is representative of many development shops.

The developer (not necessarily in this order, and not necessarily every step):

- Gets assigned a ticket to work

- Creates tasks for implementation

- Writes code

- Runs code

- Writes tests

- Runs tests

- Reviews code

- Writes a Git commit message and updates the ticket information based on changes

- Pushes code changes

While developers may have tools that assist with coding or testing individually, they still orchestrate each step manually. I use Windsurf as my IDE (I’ve written about developing with Windsurf before), and its agent assists me with writing and running code and tests. But so far, I’m still the one prompting it and performing the rest of the process myself.

That’s where the idea of a digital twin comes in: what if the agent could reason through the full user story and act autonomously, performing those additional steps automatically? I think we are almost there.

Why User Stories and Feature Files Are Ideal Inputs

The anatomy of a user story already maps well to what a model needs to reason over. It contains the who, what, and why, along with the conditions of satisfaction. Much like a developer, a model can reason about what needs to be done to implement the user story.

What about the tests? The generated code needs to be tested before it can be pushed and deployed.

I’m also a big fan of behavioral testing, so this is where something like Cucumber’s feature files come in nicely. Like a user story, everything we need is already described as features, scenarios, and steps. I’m not trying to skip over unit tests, but those are easy enough to add to the process, so I won’t go into detail here.

The importance of the Three Amigos becomes even more visible in this process. As it was before—and magnified with an agentic twin—the quality of the user story and test scenarios is a key piece.
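To make the point about structure concrete, here is a minimal sketch in plain Java that pulls scenario names and steps out of feature-file text. The `FeatureOutline` class and the sample scenario are hypothetical, written only for illustration; they are not taken from the project, and a real setup would rely on Cucumber's own parser rather than this toy reader.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: a deliberately tiny reader for Gherkin-style text.
// It only recognizes "Scenario:" headers and Given/When/Then/And/But steps.
class FeatureOutline {

    private static final List<String> STEP_KEYWORDS =
            List.of("Given ", "When ", "Then ", "And ", "But ");

    // Maps each scenario name to its ordered list of steps.
    static Map<String, List<String>> parse(String featureText) {
        Map<String, List<String>> scenarios = new LinkedHashMap<>();
        List<String> currentSteps = null;
        for (String rawLine : featureText.split("\\R")) {
            String line = rawLine.trim();
            if (line.startsWith("Scenario:")) {
                currentSteps = new ArrayList<>();
                scenarios.put(line.substring("Scenario:".length()).trim(), currentSteps);
            } else if (currentSteps != null && isStep(line)) {
                currentSteps.add(line);
            }
        }
        return scenarios;
    }

    private static boolean isStep(String line) {
        return STEP_KEYWORDS.stream().anyMatch(line::startsWith);
    }

    public static void main(String[] args) {
        // Hypothetical scenario, invented for this example.
        String sample = String.join("\n",
                "Feature: User registration",
                "  Scenario: Register with valid details",
                "    Given no account exists for the email",
                "    When the visitor submits the registration form",
                "    Then the account is created");
        parse(sample).forEach((name, steps) ->
                System.out.println(name + " -> " + steps.size() + " steps"));
    }
}
```

Even this crude reader recovers a list of named scenarios with ordered steps, which is the kind of outline an agent can turn into tests.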

The Demo Setup

To test this idea, I used my open-source electronics store, where our team was in the early stages of implementing new features.

The project is designed to be atomic—self-contained, reproducible, and simple to clone and run using an agent of your choice. This makes it easy to test digital twin workflows without needing a complex local setup.

Project Tech Stack

To give context for how the digital twin interacted with the codebase, here’s a quick look at the stack I used:

Backend

- Java Spring Boot

- Spring Security for authentication and authorization

- Spring Data JPA for database access

- H2 Database (for development)

- JUnit and Spring Test for integration testing

Frontend

- React with TypeScript

- Redux for state management

- Material-UI for component library

- React Hook Form for form validation

- Yup for schema validation

- Axios for API requests

This modern full-stack setup provided a clean separation of concerns and allowed the agent to reason across both frontend and backend layers effectively.

Application Core Functionality

Understanding the features implemented in the demo app helps frame how the digital twin interacts with a real, working system. Here’s a snapshot of the core functionality:

User Features

- User registration and login

- Authentication with role-based access control

- User profile creation and editing

- Add/remove items from the shopping cart

- View product listings and details

Admin Features

- Add, update, or delete products

- Manage registered users

- View order and user activity

These common ecommerce features give the digital twin a realistic and structured environment to work within—making the test scenario ideal for modeling typical enterprise workflows.

Adding User Registration Functionality with Agentic AI

My task: add user registration. The goal? See how far my digital twin could take it with a single, well-crafted prompt.

Key input files:

- RegisterUserStory.txt: defines the user story, describing the user registration feature

- RegistrationAPITests.feature: outlines expected behaviors and behavioral tests

- UserStoryExpanded.prompt.md: contains instructions written in natural language that guide the AI on how to use the inputs

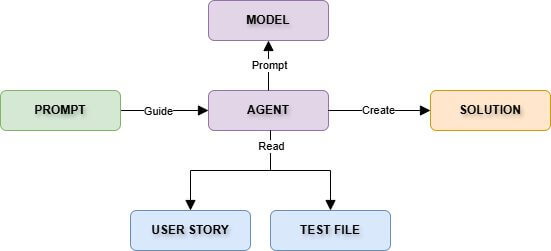

The user story is defined in RegisterUserStory.txt, and the behavioral tests are in RegistrationAPITests.feature. As my digital twin, the AI agent needs to add the contents of those files to the context of the model, along with a prompt to tell the model what to do.
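Conceptually, loading the context amounts to concatenating the three files with clear labels. The sketch below shows that idea in plain Java; the `PromptContextBuilder` class, the section headings, and the default `userstory` folder are assumptions made for illustration. Windsurf's agent handles this internally, and this is not how it is actually implemented.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper that illustrates the idea of assembling model context.
class PromptContextBuilder {

    // Joins the instruction prompt, user story, and feature file into one
    // labeled block of text so each part stays distinguishable.
    static String build(Path promptFile, Path userStoryFile, Path featureFile) throws IOException {
        StringBuilder context = new StringBuilder();
        appendSection(context, "Instructions", promptFile);
        appendSection(context, "User story", userStoryFile);
        appendSection(context, "Behavioral tests", featureFile);
        return context.toString();
    }

    private static void appendSection(StringBuilder out, String title, Path file) throws IOException {
        out.append("## ").append(title)
           .append(" (").append(file.getFileName()).append(")\n")
           .append(Files.readString(file).strip())
           .append("\n\n");
    }

    public static void main(String[] args) throws IOException {
        // The folder location is an assumption; pass the directory holding the three files.
        Path dir = Path.of(args.length > 0 ? args[0] : "userstory");
        if (!Files.isDirectory(dir)) {
            System.out.println("Usage: java PromptContextBuilder <folder-with-input-files>");
            return;
        }
        System.out.println(build(
                dir.resolve("UserStoryExpanded.prompt.md"),
                dir.resolve("RegisterUserStory.txt"),
                dir.resolve("RegistrationAPITests.feature")));
    }
}
```

Labeling each section is a design choice in this sketch: it keeps the instructions separate from the material they refer to.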

Prompt(file):

I’ve stored the prompt I will provide the agent in UserStoryExpanded.prompt.md.

I want to update the electronics-store with the user story in /userstory/RegisterUserStory.txt and keep the same theme style and create an output file in /userstory/RegisterUserStoryOutput.txt to add a summary of what changed and why

The prompt guides the agent to:

- Use the user story for implementation

- Verify expected behavior via the feature file

- Summarize what changed for use in a Git commit message

Instead of the developer being directly involved in every step listed above, the digital twin does most of the work. The developer still:

- Gets assigned a ticket to work

- Reviews code

- Pushes code changes

The digital twin takes over the rest:

- Creates tasks for implementation

- Writes code

- Runs code

- Writes tests

- Runs tests

- Writes a Git commit message and updates the ticket information based on changes

This demo represents a concrete step toward what agentic software development could look like in the near future.

The Outcome

What the Agent Did Well

So, how did the digital twin do? In this example, the digital twin performed quite well. With just one prompt, the agentic AI persona:

- Implemented the registration functionality

- Created a basic registration form with validation

- Built a user profile page

- Generated behavioral tests matching the feature scenarios

The agent’s ability to handle React + Spring Boot in tandem—including the creation of forms with React Hook Form and validation with Yup, alongside backend API integration and Spring Security roles—showed that agentic workflows can span across the full stack when the context is well-defined.

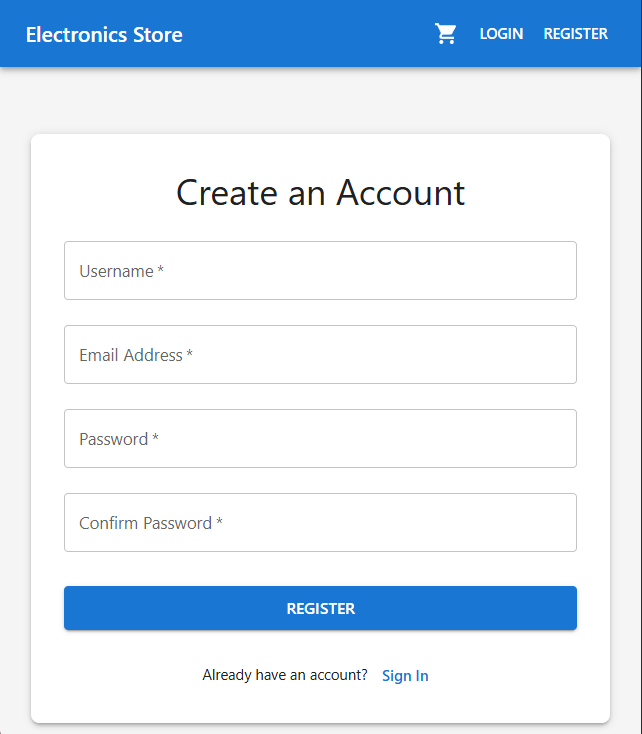

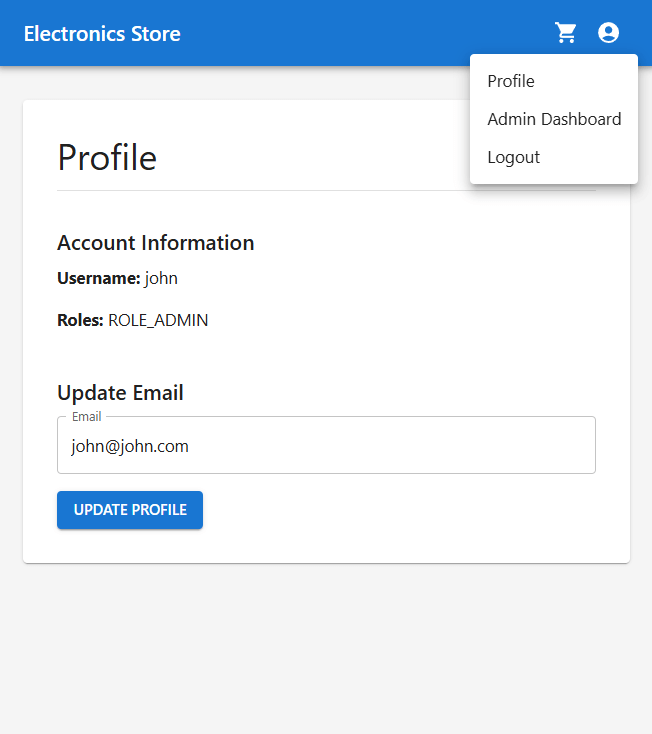

User Story Implementation

Aside from needing a nudge to stop changing the theme, the agent did a solid job implementing the user story. I’m not sure why, but the agent also wanted to change themes on other projects. It generated a working registration form with input validation (screenshot 1) and a basic user profile page (screenshot 2). I can now register on the website, log in, and create a profile.

Screenshot 1

Screenshot 2

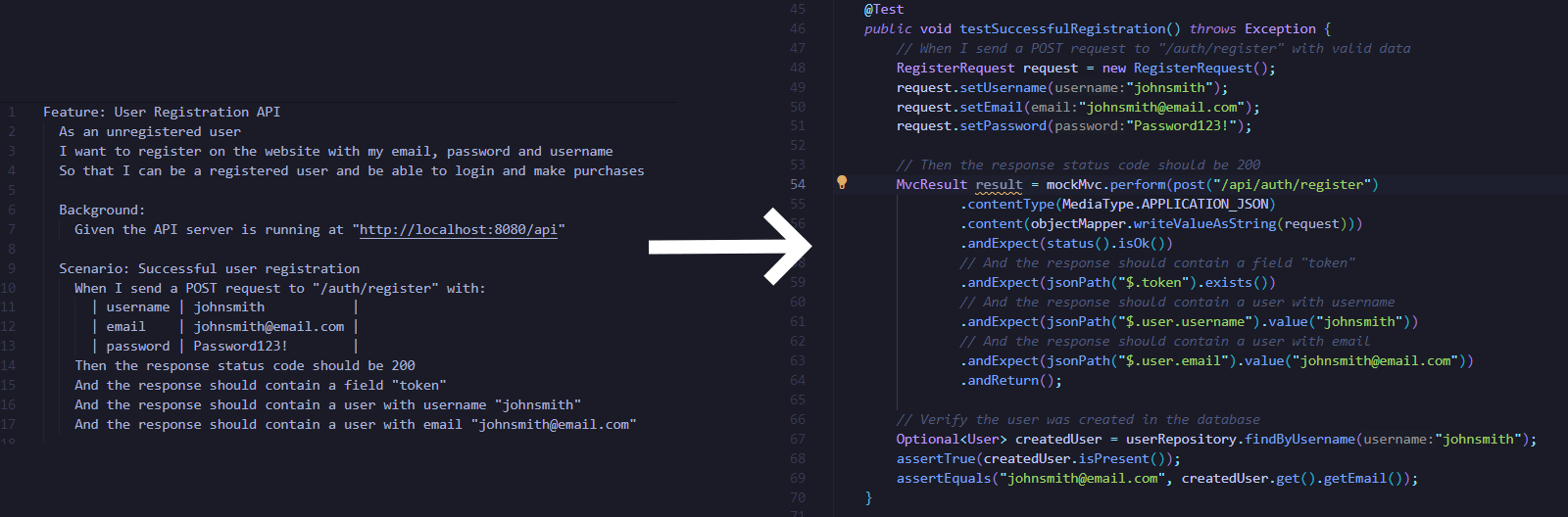

Feature Scenario to Test Comparison

The digital twin also did well at creating the behavioral tests from the feature file. The two larger screenshots show how it carried the details of the scenario into the test.

Feature scenario to test:

This demonstrated its ability to map plain-language test cases to executable test code.

While the agent did a good job creating tests based on the feature file, not all of them passed. When I repeated the steps to check that the agent was consistent in its implementation of the user story, one or two tests failed every time. The agent has trouble initializing complex tests.

For example, it should have known that the first user registered is always assigned the admin role (more about that later). The test failed because it assumed the first user would have the customer role.
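The mismatch can be reproduced with a small in-memory sketch. The `InMemoryRegistration` class and the `ROLE_CUSTOMER` name are assumptions made for illustration; the project itself uses Spring Data repositories, and only `ROLE_ADMIN` appears in the generated code. The point is that a test which registers a user against an empty store is registering the first user, so it cannot expect the customer role while the first-user rule exists.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the registration service, mimicking the
// "first registered user becomes admin" rule the agent generated.
class InMemoryRegistration {
    private final List<String> users = new ArrayList<>();

    // Registers a user and returns the role that was assigned.
    String register(String email) {
        users.add(email);
        // Exactly one stored user means this registration was the first one.
        return users.size() == 1 ? "ROLE_ADMIN" : "ROLE_CUSTOMER";
    }

    public static void main(String[] args) {
        // A test that starts from an empty store registers the FIRST user,
        // so an assertion expecting the customer role fails here.
        InMemoryRegistration emptyStore = new InMemoryRegistration();
        System.out.println("first user:  " + emptyStore.register("first@example.com"));

        // Seeding one user beforehand makes the customer expectation hold.
        InMemoryRegistration seededStore = new InMemoryRegistration();
        seededStore.register("seed@example.com");
        System.out.println("second user: " + seededStore.register("second@example.com"));
    }
}
```

In this sketch, a test would either need to seed a user first or assert the admin role for the first registration.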

Developer-in-the-loop

Even with the brilliance of the digital twin, the original twin (i.e., you, the developer) is still needed for code reviews.

For example, the following code was created before any tests were written. The digital twin implemented the assumption that the first user created should have the admin role.

```java
// For testing purposes, create an admin user if this is the first user
if (userRepository.count() == 1) {
    user.setRoles(new HashSet<>(Collections.singletonList("ROLE_ADMIN")));
    user = userRepository.save(user);
}
```

You probably don’t want code like that going into production.
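One possible alternative, sketched in plain Java under stated assumptions: every registration gets a default role, and the admin role is granted only to emails on an explicit allow-list supplied from configuration. The `RoleAssigner` class, the `ROLE_CUSTOMER` name, and the allow-list idea are hypothetical and not part of the project; the right fix depends on how a team actually provisions administrators.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Hypothetical role policy: registration order never decides privileges.
class RoleAssigner {
    private final Set<String> adminEmails = new HashSet<>();

    // The allow-list is expected to come from configuration, not user input.
    RoleAssigner(Set<String> configuredAdminEmails) {
        for (String email : configuredAdminEmails) {
            adminEmails.add(normalize(email));
        }
    }

    // Returns the roles for a newly registered user.
    Set<String> rolesFor(String email) {
        String role = adminEmails.contains(normalize(email)) ? "ROLE_ADMIN" : "ROLE_CUSTOMER";
        return new HashSet<>(Collections.singletonList(role));
    }

    // Compares emails case-insensitively and ignores surrounding whitespace.
    private static String normalize(String email) {
        return email.trim().toLowerCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        RoleAssigner assigner = new RoleAssigner(Set.of("owner@example.com"));
        // The first visitor to register is still just a customer.
        System.out.println(assigner.rolesFor("first.visitor@example.com"));
        // Only the configured address receives the admin role.
        System.out.println(assigner.rolesFor("Owner@example.com"));
    }
}
```

With a policy like this, the behavioral tests no longer depend on how many users exist when a scenario starts.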

This is just one example—there are others you’ll spot throughout the code. It’s a clear reminder that even in AI-assisted development, real developers are essential for reviewing assumptions and maintaining code quality.

Where The AI Agent Struggled – A Recap

While the digital developer twin / Agentic AI successfully generated functional code and tests, it wasn’t perfect:

- It made incorrect assumptions, like auto-assigning admin roles to the first registered user.

- A few behavioral tests failed due to missing business context and logic gaps.

- It occasionally tried to change unrelated themes in other modules.

These highlight why a developer-in-the-loop is still essential. AI may handle 80% of the work as an autonomous coding assistant, but critical thinking, validation, and experience remain irreplaceable. This is something we have seen repeatedly in our enterprise AI consulting services.

Performance Insights

As I mentioned before, I re-ran the full process multiple times.

In the first five runs, I didn’t ask for tests in the prompt. After analyzing the existing code, the agent determined that only the frontend project needed updating, because the backend project already had basic registration API functions to make the frontend work.

- The agent took about 5 – 10 minutes to complete the process.

When I asked for tests in the prompt to verify behavior, the agent determined that the API needed to be more robust in handling unhappy paths. This shows the agent can be lazy.

- With tests added to the process, the agent took about 10 – 15 minutes to complete it.

Important to note: my digital twin used Claude 3.7 Sonnet (Thinking); other models may produce different results.

Key Agentic Development Takeaways

- Agentic development is more than just code suggestions—it’s automated, context-aware coding.

- User stories + behavioral tests = ideal ingredients for an autonomous twin.

- Even with automation, the developer-in-the-loop is essential for validation and quality control.

What’s Next?

My next steps involve connecting this workflow to a service that pulls user stories and test specs remotely, so everything doesn’t have to live locally.

If you’re experimenting with agentic development or digital twin tooling, let me know which IDEs, agents, and models you’re using and how they handle working with your digital twin. I’d love to compare notes.

Project source: https://github.com/jhoestje/electronics-store

More From John Hoestje
