Long-Running Workflows Made Simple with C# + Azure Durable Functions

August 12, 2025

When you’re building cloud-native applications, it’s common to run into challenges around long-running workflows, state management, and resiliency. Traditional approaches like background services or message queues can work, but they often require a lot of “glue code” and custom retry logic. That means more up-front work for the developer and more maintenance in the long run.

That’s where Azure Durable Functions come in.

In this post, I’ll walk you through building and running a simple order processing workflow in C# with Azure Durable Functions, with examples of the following:

- The Orchestrator function

- Activity functions

- Durable timers

- Error handling and retries

Durable Functions offer several advantages. They checkpoint workflow state between executions, so you don’t need to write custom state-management logic. They support long-running workflows that can last minutes, days, or longer. And because workflows are defined in standard C# code, they’re easy to learn.

What Are Azure Durable Functions?

Durable Functions are an extension of Azure Functions that let you write stateful workflows in a serverless way. Under the hood, they use event sourcing and checkpoints: the result of each completed step is recorded, and the workflow’s state is rebuilt by replaying that record, with no infrastructure for you to manage.

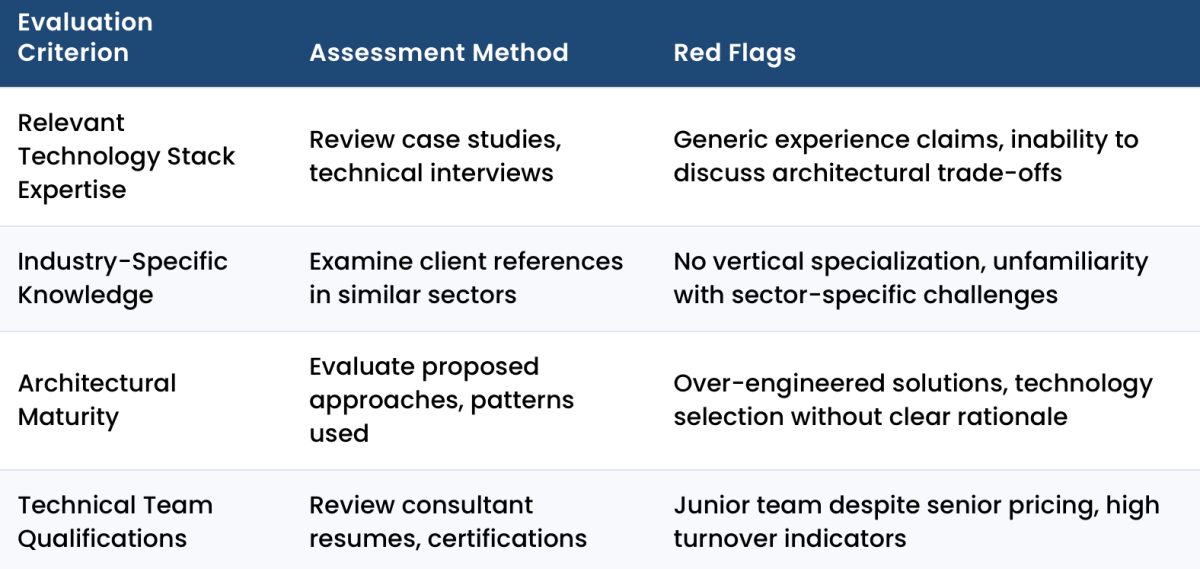

Durable Functions consist of Orchestrator, Activity, and Client Functions. Each function type has a specific role in the overall workflow:

- Orchestrator Functions: Define the workflow (must be deterministic, because they are replayed)

- Activity Functions: Perform the actual work (e.g., API calls, database writes)

- Client Functions: Start and manage orchestration instances
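The “must be deterministic” rule exists because an orchestrator is replayed from the start every time it resumes, and each replay must make the same decisions as the original run. Below is a minimal sketch of common do/don’t substitutions, assuming the .NET isolated worker model (the Microsoft.Azure.Functions.Worker.Extensions.DurableTask package) that this post uses; the function name DeterministicOrchestrator is hypothetical.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.DurableTask;
using Microsoft.Extensions.Logging;

public static class DeterminismExamples
{
    // Hypothetical orchestrator used only to illustrate replay-safe APIs.
    [Function("DeterministicOrchestrator")]
    public static async Task<string> Run([OrchestrationTrigger] TaskOrchestrationContext context)
    {
        // Replay-safe logger: suppresses duplicate log lines while the orchestrator replays.
        ILogger logger = context.CreateReplaySafeLogger("DeterministicOrchestrator");

        // DON'T: DateTime.UtcNow returns a different value on every replay.
        // DO: context.CurrentUtcDateTime returns the same value on every replay.
        DateTime startedAt = context.CurrentUtcDateTime;

        // DON'T: Guid.NewGuid() is random on every replay.
        // DO: context.NewGuid() yields the same GUID on each replay of this instance.
        Guid correlationId = context.NewGuid();

        logger.LogInformation("Started at {StartedAt} with correlation {CorrelationId}",
            startedAt, correlationId);

        // DON'T: use Thread.Sleep/Task.Delay or do I/O here.
        // DO: use durable timers for delays and move I/O into activity functions.
        await context.CreateTimer(startedAt.AddSeconds(30), CancellationToken.None);

        return correlationId.ToString();
    }
}
```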

Whenever the Orchestrator schedules an Activity Function (or awaits a timer or an external event), the Durable Task Framework “checkpoints” the orchestrator’s progress to a durable storage backend (by default, the Azure Storage provider, which keeps this state in Azure Table storage). This record of events is referred to as the orchestration history.
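To make checkpointing and replay concrete, here is a deliberately simplified, self-contained model of the idea in plain C#. It is not how the Durable Task Framework is implemented: the real runtime dispatches activities to workers through the storage backend and replays the orchestrator when their results arrive, whereas this toy runs each activity inline. ToyContext, SuspendOrchestrationException, and ToyReplayDemo are hypothetical names for illustration only.

```csharp
using System;
using System.Collections.Generic;

// Toy signal: the orchestrator reached a step with no recorded result and must yield.
public sealed class SuspendOrchestrationException : Exception { }

// Toy stand-in for an orchestration context (NOT the real Durable Task implementation).
public sealed class ToyContext
{
    private readonly List<string> _history;                  // checkpointed results, in call order
    private readonly Func<string, string, string> _runActivity;
    private int _cursor;                                      // history entries consumed by this replay

    public ToyContext(List<string> history, Func<string, string, string> runActivity)
    {
        _history = history;
        _runActivity = runActivity;
    }

    public string CallActivity(string name, string input)
    {
        if (_cursor < _history.Count)
            return _history[_cursor++];           // replay: reuse the recorded result, don't re-run

        _history.Add(_runActivity(name, input));  // new step: run once and checkpoint the result
        throw new SuspendOrchestrationException(); // yield; the orchestrator restarts from the top
    }
}

public static class ToyReplayDemo
{
    // Must be deterministic: each replay must request the same activities in the same order.
    public static List<string> Orchestrator(ToyContext ctx, string orderId)
    {
        var outputs = new List<string>();
        outputs.Add(ctx.CallActivity("ValidateOrder", orderId));
        outputs.Add(ctx.CallActivity("ProcessPayment", orderId));
        outputs.Add(ctx.CallActivity("SendConfirmation", orderId));
        return outputs;
    }

    public static (List<string> Outputs, int Replays, int Executions) Run(string orderId)
    {
        var history = new List<string>();
        int executions = 0;
        Func<string, string, string> runActivity = (name, input) =>
        {
            executions++;
            return $"{name}({input}) done";
        };

        for (int replays = 1; ; replays++)
        {
            try
            {
                List<string> outputs = Orchestrator(new ToyContext(history, runActivity), orderId);
                return (outputs, replays, executions);
            }
            catch (SuspendOrchestrationException)
            {
                // The new result is already in history; loop to replay the orchestrator.
            }
        }
    }
}
```

Running ToyReplayDemo.Run("42") replays the orchestrator four times but executes each of the three activities only once. It also shows why determinism matters: if a replay requested a different activity than the one recorded at that position, the history would no longer line up with the code.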

Activity Functions are invoked by the Orchestrator and perform discrete units of work. They are stateless and can be retried independently if they fail. In C#, an activity’s input parameter is bound with the [ActivityTrigger] attribute. Behind the scenes, the Durable Functions runtime polls the configured storage backend for new activity work items (with the default Azure Storage provider, these arrive on a work-item queue).
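Activity inputs don’t have to be plain strings; Durable Functions serializes inputs and outputs (as JSON by default), so an activity can accept a small record type and also take a FunctionContext for logging. A sketch under those assumptions, where PaymentRequest and the ChargePayment activity are hypothetical and not part of the order workflow built later:

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Extensions.Logging;

// Hypothetical input type; serialized by the Durable Functions runtime.
public record PaymentRequest(string OrderId, decimal Amount);

public static class PaymentActivities
{
    [Function("ChargePayment")]
    public static async Task<string> ChargePayment(
        [ActivityTrigger] PaymentRequest request,
        FunctionContext executionContext)
    {
        ILogger logger = executionContext.GetLogger("ChargePayment");
        logger.LogInformation("Charging {Amount} for order {OrderId}",
            request.Amount, request.OrderId);

        // Activities may do real I/O (HTTP calls, DB writes); simulated here with a delay.
        await Task.Delay(100);
        return $"Charged {request.Amount} for order {request.OrderId}.";
    }
}
```

An orchestrator would then pass an instance as the input, for example await context.CallActivityAsync<string>("ChargePayment", new PaymentRequest(orderId, 49.99m)) with an illustrative amount.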

Client functions, meaning functions that take a [DurableClient] input binding, initiate the orchestration of long-running processes. They are triggered by external events, such as HTTP requests, queue messages, or timers, and they use the Durable Functions client API to start, query, or terminate orchestrations.
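As a sketch of the “query” side, here is a hypothetical HTTP-triggered client function (GetOrderStatus and its route are assumptions) that looks up an instance with DurableTaskClient.GetInstanceAsync, assuming a recent Microsoft.DurableTask.Client package in the isolated worker model:

```csharp
using System.Net;
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Azure.Functions.Worker.Http;
using Microsoft.DurableTask.Client;

public static class OrderStatusFunctions
{
    // Hypothetical route: GET /api/orders/{instanceId}/status
    [Function("GetOrderStatus")]
    public static async Task<HttpResponseData> GetOrderStatus(
        [HttpTrigger(AuthorizationLevel.Function, "get", Route = "orders/{instanceId}/status")]
        HttpRequestData req,
        string instanceId,
        [DurableClient] DurableTaskClient client)
    {
        // Look up the instance; returns null if no orchestration has this ID.
        OrchestrationMetadata? metadata =
            await client.GetInstanceAsync(instanceId, getInputsAndOutputs: true);
        if (metadata is null)
            return req.CreateResponse(HttpStatusCode.NotFound);

        var response = req.CreateResponse(HttpStatusCode.OK);
        await response.WriteStringAsync(
            $"Status: {metadata.RuntimeStatus}, last updated: {metadata.LastUpdatedAt:u}");
        return response;
    }
}
```

The same client object also exposes TerminateInstanceAsync for cancelling a running instance.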

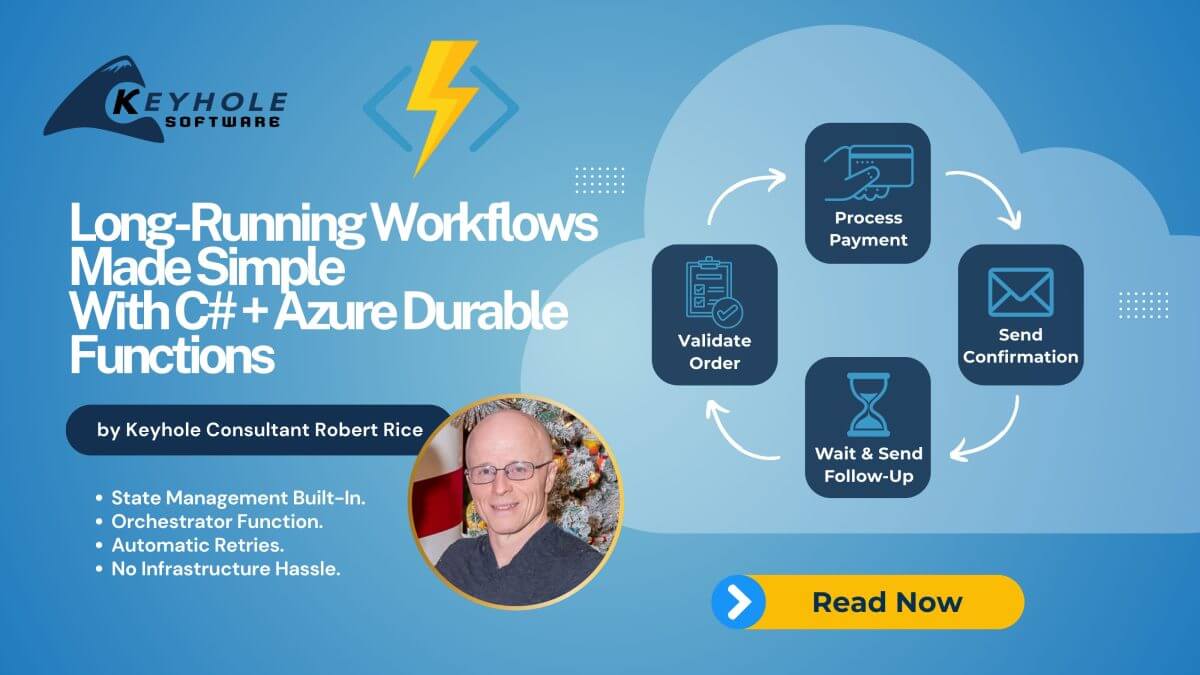

Use Case: Order Processing Workflow

Now that we’ve covered the basics of Azure Durable Functions, let’s look at a real-world use case. Say we want to model a workflow like this:

- Validate the order

- Process the payment

- Send confirmation

- Wait 2 minutes before sending a follow-up email

Let me show you how to implement this in C#.

Step 1: Set Up the Project

You can scaffold a Durable Functions app from the Azure Functions templates, either in Visual Studio or with the Azure Functions Core Tools (func) CLI. Below is an example using the CLI in Bash:

```bash
func init DurableOrderApp --worker-runtime dotnetIsolated
cd DurableOrderApp
func new --template "DurableFunctionsOrchestration" --name OrderOrchestrator
```

Step 2: Create the Activity Functions

Next, define your Activity Functions. As a reminder, these are the discrete, reusable units of work that the Orchestrator will call in sequence. Each method is decorated with the [Function] attribute and its input parameter with [ActivityTrigger], which registers it with the Durable Functions runtime. In this example, the activities simulate order validation, payment processing, and customer communication.

```csharp
using Microsoft.Azure.Functions.Worker;

public static class OrderActivities
{
    // Each [Function] method is an activity; [ActivityTrigger] binds the input sent by the orchestrator.
    [Function("ValidateOrder")]
    public static string ValidateOrder([ActivityTrigger] string orderId)
    {
        // Simulate validation
        return $"Order {orderId} validated.";
    }

    [Function("ProcessPayment")]
    public static string ProcessPayment([ActivityTrigger] string orderId)
    {
        // Simulate a payment call
        return $"Payment processed for order {orderId}.";
    }

    [Function("SendConfirmation")]
    public static string SendConfirmation([ActivityTrigger] string orderId)
    {
        // Simulate sending the confirmation email
        return $"Confirmation email sent for order {orderId}.";
    }

    [Function("SendFollowUp")]
    public static string SendFollowUp([ActivityTrigger] string orderId)
    {
        // Simulate sending the follow-up email
        return $"Follow-up email sent for order {orderId}.";
    }
}
```

Step 3: Implement the Orchestrator Function

The orchestration trigger enables you to author durable orchestrator functions. This trigger executes when a new orchestration instance is scheduled and when an existing orchestration instance receives an event. Examples of events that can trigger orchestrator functions include durable timer expirations, activity function responses, and events raised by external clients.

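In .NET, you configure the orchestration trigger by applying the [OrchestrationTrigger] attribute (OrchestrationTriggerAttribute) to a TaskOrchestrationContext parameter. Internally, this trigger binding polls the configured durable store for new orchestration events, such as orchestration start events, durable timer expiration events, activity function response events, and external events raised by other functions. Each time a new event arrives, the orchestrator function replays from the beginning, taking the results of already-completed steps from the orchestration history instead of running them again.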

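```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.DurableTask;

public static class OrderOrchestration
{
    [Function("OrderOrchestrator")]
    public static async Task<List<string>> RunOrchestrator(
        [OrchestrationTrigger] TaskOrchestrationContext context)
    {
        var outputs = new List<string>();
        string orderId = context.GetInput<string>()!;

        // Each await checkpoints progress; on replay, completed steps return their recorded results.
        outputs.Add(await context.CallActivityAsync<string>("ValidateOrder", orderId));
        outputs.Add(await context.CallActivityAsync<string>("ProcessPayment", orderId));
        outputs.Add(await context.CallActivityAsync<string>("SendConfirmation", orderId));

        // Wait 2 minutes before sending the follow-up. Use the replay-safe
        // CurrentUtcDateTime, never DateTime.UtcNow, inside an orchestrator.
        DateTime nextCheck = context.CurrentUtcDateTime.AddMinutes(2);
        await context.CreateTimer(nextCheck, CancellationToken.None);

        outputs.Add(await context.CallActivityAsync<string>("SendFollowUp", orderId));
        return outputs;
    }
}
```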
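Durable timers can also act as timeouts. The sketch below is a variation on the follow-up step, assuming a hypothetical CustomerOptedOut external event and a hypothetical FollowUpOrchestrator function. The orchestrator waits for whichever comes first, the two-minute timer or the event, and cancels the timer if the event wins, because an orchestration can’t complete while a durable timer is still pending.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.DurableTask;

public static class FollowUpWithOptOut
{
    [Function("FollowUpOrchestrator")]
    public static async Task<string> Run([OrchestrationTrigger] TaskOrchestrationContext context)
    {
        string orderId = context.GetInput<string>()!;

        using var timerCts = new CancellationTokenSource();
        DateTime fireAt = context.CurrentUtcDateTime.AddMinutes(2);
        Task timerTask = context.CreateTimer(fireAt, timerCts.Token);

        // Hypothetical event name; a client would raise it for this instance.
        Task<bool> optOutTask = context.WaitForExternalEvent<bool>("CustomerOptedOut");

        Task winner = await Task.WhenAny(timerTask, optOutTask);
        if (winner == optOutTask)
        {
            // Cancel the pending durable timer so the orchestration can complete.
            timerCts.Cancel();
            return $"Follow-up skipped for order {orderId}.";
        }

        return await context.CallActivityAsync<string>("SendFollowUp", orderId);
    }
}
```

A client function could raise the event with await client.RaiseEventAsync(instanceId, "CustomerOptedOut", true).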

Step 4: Trigger the Workflow

With your orchestrator and activity functions in place, the next step is to start the orchestration. An HTTP-triggered client function is a common way to do this, allowing external callers—such as web applications, APIs, or testing tools—to initiate the workflow.

The [DurableClient] attribute provides a DurableTaskClient instance, which you can use to start, query, terminate, or send events to orchestration instances. In this example, the function reads the orderId from the request body and uses ScheduleNewOrchestrationInstanceAsync() to launch the OrderOrchestrator with that input. The returned instance ID can be used later to check the orchestration’s status.

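```csharp
using System.Net;
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Azure.Functions.Worker.Http;
using Microsoft.DurableTask.Client;

public static class OrderWorkflowStarter
{
    [Function("StartOrderWorkflow")]
    public static async Task<HttpResponseData> Run(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequestData req,
        [DurableClient] DurableTaskClient client)
    {
        // The request body is the order ID.
        string? orderId = await req.ReadAsStringAsync();

        string instanceId = await client.ScheduleNewOrchestrationInstanceAsync(
            "OrderOrchestrator", orderId);

        // HttpRequestData.CreateResponse takes only a status code; write the body separately.
        var response = req.CreateResponse(HttpStatusCode.OK);
        await response.WriteStringAsync($"Started orchestration: {instanceId}");
        return response;
    }
}
```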
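Instead of returning a plain string, the isolated worker’s CreateCheckStatusResponseAsync extension can build the response for you: a 202 Accepted whose JSON body lists management URLs for the instance, such as statusQueryGetUri and terminatePostUri. A sketch of that variant, with the hypothetical function name StartOrderWorkflowWithStatus:

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Azure.Functions.Worker.Http;
using Microsoft.DurableTask.Client;

public static class OrderStarterWithStatus
{
    [Function("StartOrderWorkflowWithStatus")]
    public static async Task<HttpResponseData> Run(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequestData req,
        [DurableClient] DurableTaskClient client)
    {
        string? orderId = await req.ReadAsStringAsync();
        string instanceId = await client.ScheduleNewOrchestrationInstanceAsync(
            "OrderOrchestrator", orderId);

        // 202 Accepted plus a body of status/terminate/event URLs for this instance.
        return await client.CreateCheckStatusResponseAsync(req, instanceId);
    }
}
```

Callers can then poll statusQueryGetUri instead of needing a custom status endpoint.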

Testing It Out

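Run the project locally with func start to start your Functions host. Because the default Durable Functions storage provider keeps its state in Azure Storage, make sure the Azurite storage emulator is running (the generated local.settings.json typically sets AzureWebJobsStorage to UseDevelopmentStorage=true). Then send a POST request whose body is an order ID to http://localhost:7071/api/StartOrderWorkflow to trigger the orchestration. You can watch the workflow’s progress in the console output; the follow-up activity runs about two minutes after confirmation, once the durable timer fires. After you deploy the app, you can also monitor orchestration instances from the Azure portal.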

Retry and Error Handling

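Built-in retries are another significant advantage of Durable Functions. Instead of writing your own retry loops, you can attach a retry policy to an activity call. For example, the following makes up to three attempts to run ProcessPayment, waiting five seconds before retrying: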

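```csharp
// Isolated worker (Microsoft.DurableTask): up to 3 attempts, first retry after 5 seconds.
var retryOptions = TaskOptions.FromRetryPolicy(new RetryPolicy(
    maxNumberOfAttempts: 3,
    firstRetryInterval: TimeSpan.FromSeconds(5)));

outputs.Add(await context.CallActivityAsync<string>("ProcessPayment", orderId, retryOptions));
```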
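When every attempt fails, the activity’s error surfaces in the orchestrator as a TaskFailedException, which you can catch like any other exception. The sketch below shows a compensating step, assuming a hypothetical CancelOrder activity (you would add it alongside the other activities) and a hypothetical OrderOrchestratorWithCompensation function name:

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Functions.Worker;
using Microsoft.DurableTask;

public static class OrderOrchestratorWithCompensation
{
    [Function("OrderOrchestratorWithCompensation")]
    public static async Task<string> Run([OrchestrationTrigger] TaskOrchestrationContext context)
    {
        string orderId = context.GetInput<string>()!;
        var retryOptions = TaskOptions.FromRetryPolicy(new RetryPolicy(
            maxNumberOfAttempts: 3,
            firstRetryInterval: TimeSpan.FromSeconds(5)));

        await context.CallActivityAsync<string>("ValidateOrder", orderId);
        try
        {
            return await context.CallActivityAsync<string>("ProcessPayment", orderId, retryOptions);
        }
        catch (TaskFailedException ex)
        {
            // All retries exhausted: run the hypothetical compensating activity.
            await context.CallActivityAsync<string>("CancelOrder", orderId);
            return $"Order {orderId} cancelled: {ex.FailureDetails.ErrorMessage}";
        }
    }
}
```

Because the catch block runs inside the orchestrator, the compensation is itself checkpointed and survives restarts like any other step.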

Conclusion

Durable Functions in C# offer a powerful way to implement long-running, stateful workflows without the complexity of traditional solutions like custom background services or intricate message queue setups. By leveraging orchestrator functions, activity functions, durable timers, and built-in error handling, you can create processes that are both reliable and maintainable, whether they run for seconds, hours, or even days.

In the example we walked through, we built a simple order processing workflow, but the same patterns can be applied to many scenarios: approval chains that require multiple human interactions, scheduled follow-ups and reminders, data processing pipelines, and more. Because Durable Functions handle state checkpoints and retries automatically, you spend less time worrying about infrastructure details and more time focusing on business logic.

If you’re developing cloud-native applications that demand resiliency, scalability, and clear orchestration of tasks, Durable Functions are a strong option to consider. They give you the flexibility of plain C# code with the reliability of a managed workflow engine, making it easier to deliver solutions that stand the test of time.

More From Robert Rice

About Keyhole Software

Expert team of software developer consultants solving complex software challenges for U.S. clients.